BigQuery has introduced many new features over the Summer months. In this post, we'll briefly overview the five that we find the most exciting which could greatly benefit your data workflows.

1. GROUP BY Array/Struct (August 28, 2024 in Preview)

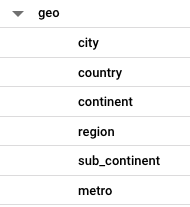

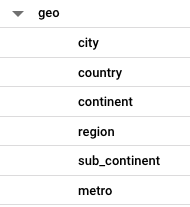

BigQuery added an ability to group by an array or struct. Grouping by these complex data types can simplify queries where you would otherwise have to access individual fields to achieve the same outcome. In the case of GA4 export, for example, we can now GROUP BY the geo field instead of individually specifying each of the six nested fields for a detailed geo aggregation.

SELECT

geo,

COUNT(*) AS events

FROM

`analytics_123456789.events_20240926`

GROUP BY 1

ORDER BY events DESC

All six geo fields are included in the output.

Example of an output

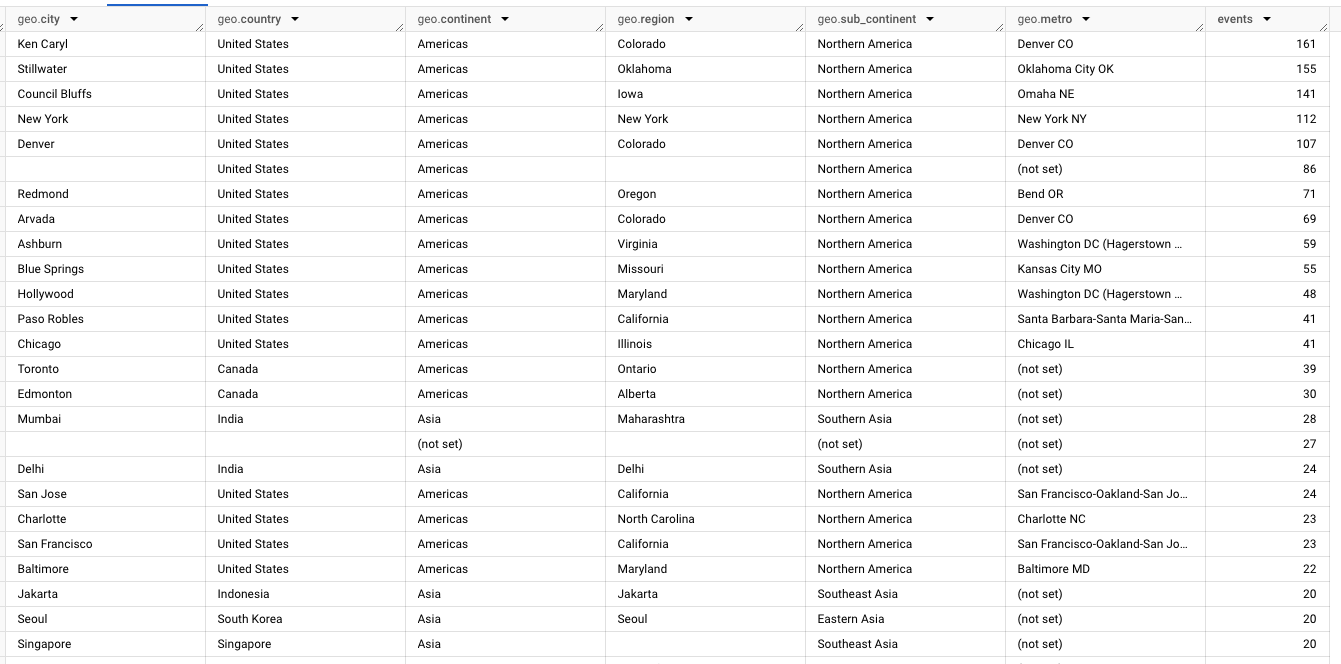

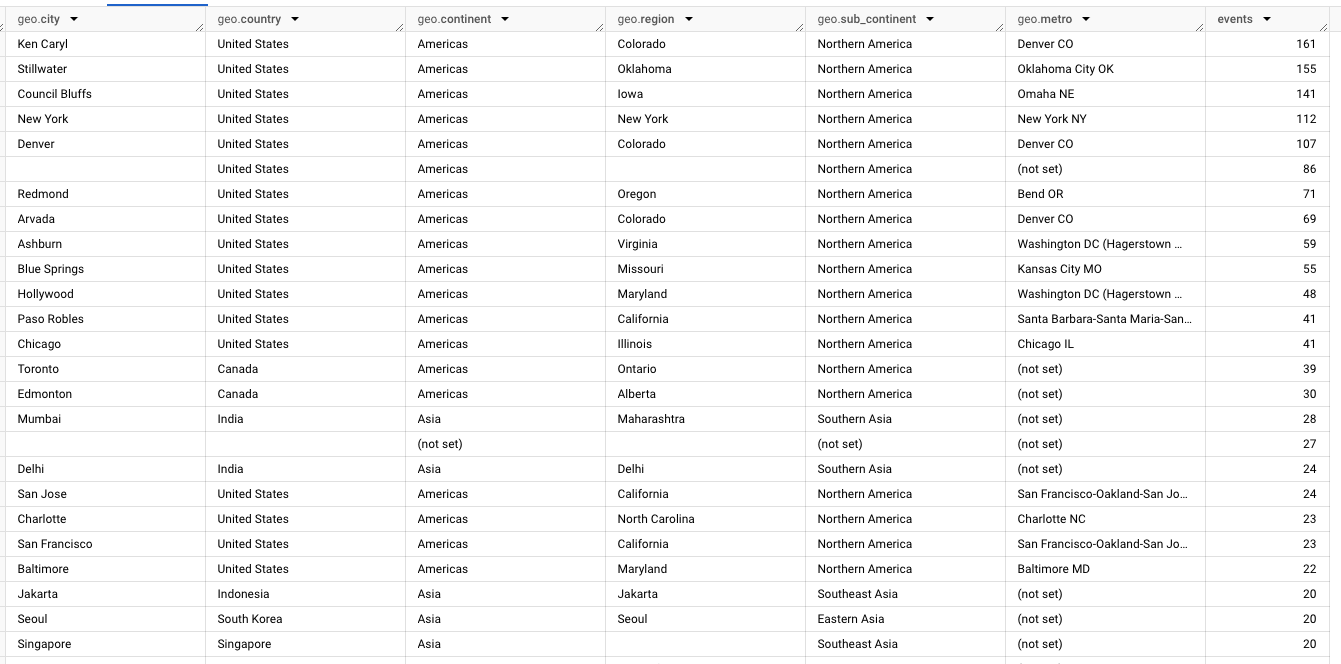

2. Short Query Optimized Mode (August 14, 2024 in Preview)

The short query optimized mode allows the user to run their queries in a lower latency mode, perfect for dashboards and quick interactive queries. If BigQuery determines that running a query in this mode is possible, it will provide the rows of the results considerably quicker but will not create the usual job. Therefore, there will be no jobReference available.

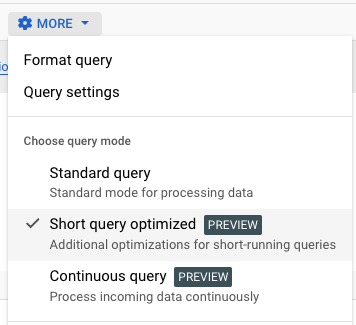

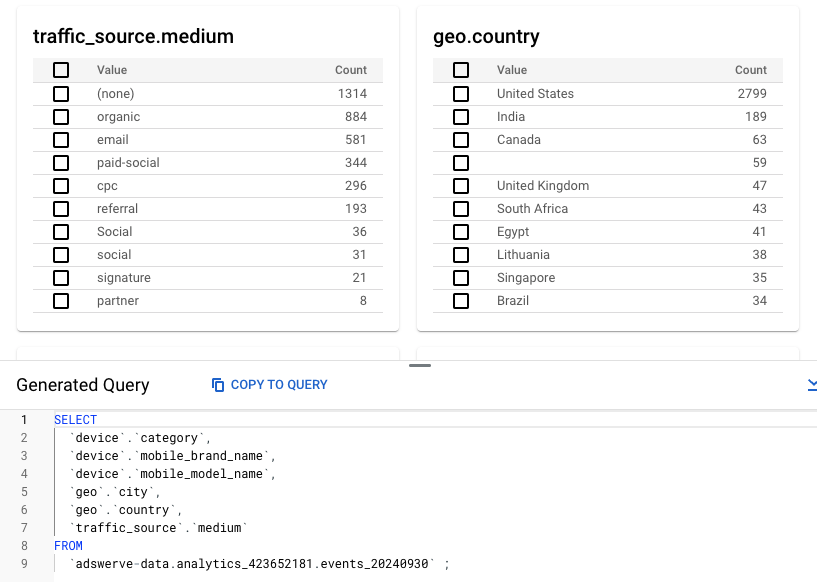

3. Table Explorer (July 25, 2024 in Preview)

Table Explorer gives users a quick overview of the values and distributions in selected fields of individual tables. On top of that, it also provides queries responsible for the outputs to help kick off the explorations.

4. Change History (July 22, 2024 in Preview)

Change History in BigQuery, as the name suggests, allows you to query the history of changes (update, delete, insert, merge, stream...) in any table. You can query up to seven-day history of changes (less if the time travel window has been updated). On the other side the end timestamp value must be at least ten minutes old (changes made in the last 10 minutes are not accessible).

In the example below the query first creates a table (#1), adds new rows to it (#2), and then updates one of the existing rows (#3). In the snippet following the table creation and manipulation, we query the change table and review the outputs.

Create a test table:

#1 Create a new table

CREATE OR REPLACE TABLE explorations.Olympics_CHANGES

OPTIONS(enable_change_history = True)

AS

SELECT "Tokyo" as City, 2020 as year

UNION ALL

SELECT "Beijing" as City, 2022 as year

UNION ALL

SELECT "Paris" as City, 2024 as year

UNION ALL

SELECT "Cortina" as City, 2026 as year;

#2 Add new cities

INSERT INTO explorations.Olympics_CHANGES VALUES ("Los Angeles", 2028);

INSERT INTO explorations.Olympics_CHANGES VALUES ("French Alps", 2030);

#3 Update a city

UPDATE explorations.Olympics_CHANGES

SET year=2021 WHERE City="Tokyo" AND year=2020;

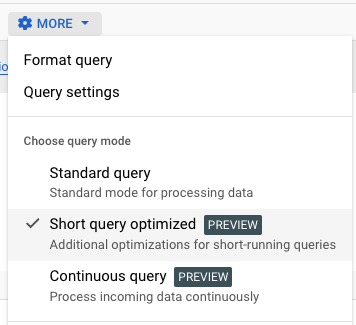

Query for table changes using the CHANGES(TABLE, start_time, end_time) function. If start_time is set to NULL it will look back to the creation of the table, end_time is required to be more than 600 seconds older than the current time.

# REVIEW CHANGES

SELECT

city,

year,

_CHANGE_TYPE AS change_type,

_CHANGE_TIMESTAMP AS change_time

FROM

CHANGES(TABLE explorations.Olympics_CHANGES,

NULL,

TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 11 MINUTE))

ORDER BY change_time

The output of the CHANGES query above.

You can see the 4 initial cities were added at 16:54:01 at the table creation, we then INSERT-ed Los Angeles and French Alps at 16:54:10 and 16:54:12 and after we updated the Tokyo to 2021 (the Olympics were pushed due to Covid-19) at 16:54:24. The update also removed the original Tokyo 2020 from our table.

5. Continuous Queries (July 22, 2024 in Preview)

Continuous queries are one of the biggest new feature of BigQuery in recent history. Continuous Queries provide you with the ability to listen for and stream/forward table inserts using SQL in real-time. Any data written to a table (BQ Storage Write API, streaming, batch load or INSERT) can be pushed to a new BQ Table, a Pub/Sub topic or Bigtable.

In the example below we use a SQL query that filters by an event name "blog_view" and selects some of the basic dimensions from the real-time Google Analytics 4 export to BigQuery from our website. The continuous query then pushes that data as a message to a Pub/Sub topic.

EXPORT DATA

OPTIONS (

format = 'CLOUD_PUBSUB',

uri = 'https://pubsub.googleapis.com/projects/adswerve-data/topics/blog-readers')

AS (

SELECT

TO_JSON_STRING(

struct(

(SELECT value.string_value

FROM unnest(event_params)

WHERE key = "page_location") as page_location,

user_pseudo_id,

device.operating_system,

session_traffic_source_last_click.manual_campaign.medium)) as message

FROM `adswerve-data.analytics_423652181.events_intraday_20241002`

WHERE event_name = "blog_view"

)

The result: seconds after opening the following page on our blog "https://adswerve.com/blog/ga4-bigquery-tips-guide-to-attribution?continous_queries=testing" I am able to pull the message from a Pub/Sub topic.

Adswerve provides BigQuery consulting and training for teams around the world. To learn more, don't hesitate to get in touch with us!